This is the first in a series of alerts that will address what businesses should consider as they navigate employment laws, data privacy and compliance challenges in deploying artificial intelligence to scale their business operations.

Introduction

Advances in artificial intelligence (AI) are transforming the workplace. Companies are increasingly turning to AI-enabled tools to attract and retain talent. While these new technologies promise efficiency and productivity, they also introduce new complexities and considerations, including algorithmic bias, disparate impact, data privacy, and discrimination concerns. We address each of these broadly below, and we intend to cover each issue in more depth in a series of alerts in the coming weeks and months.

The Basics: AI and Bias

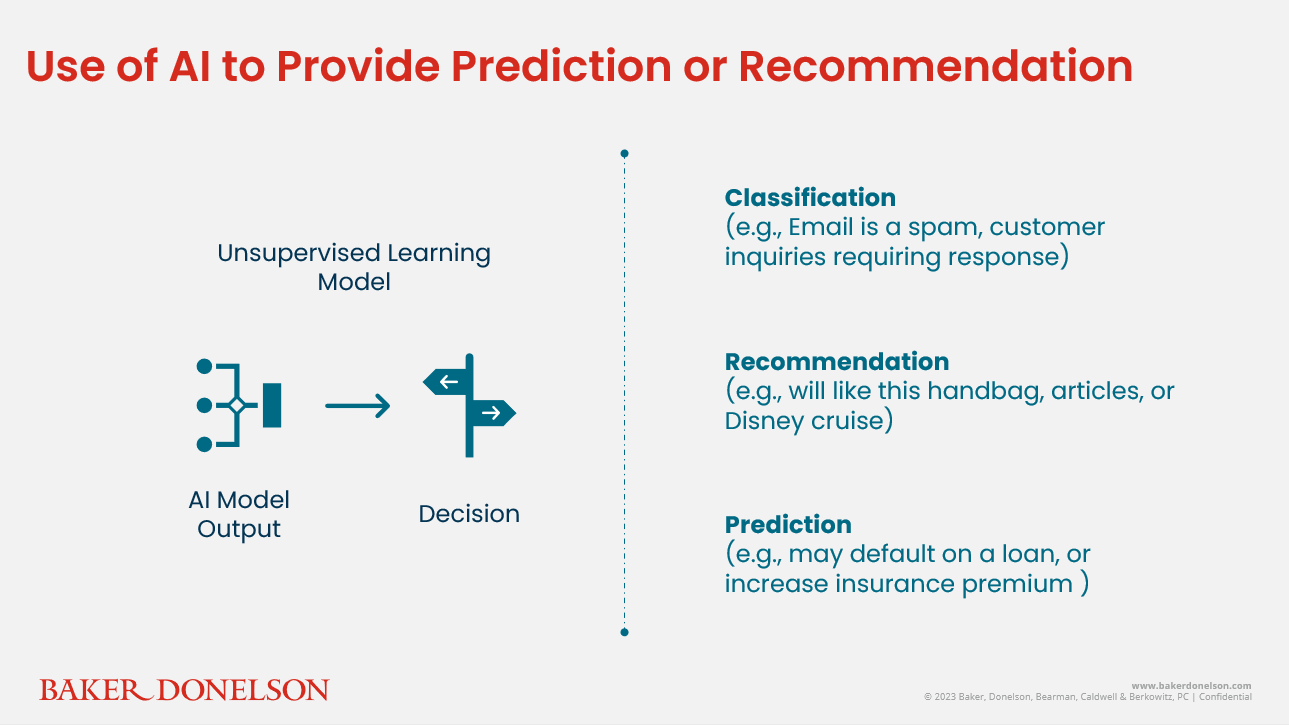

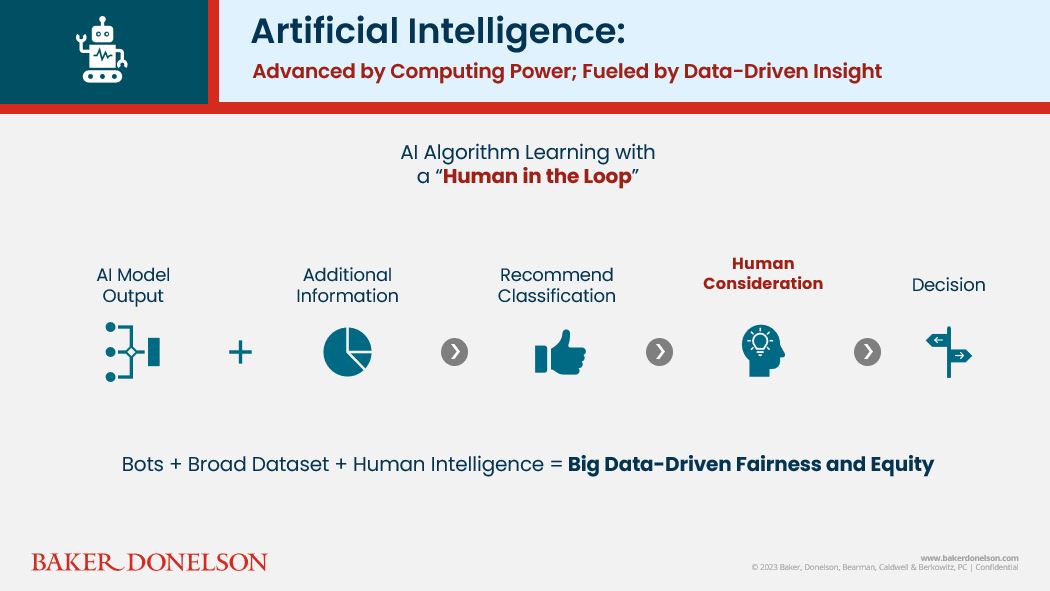

AI, at its core, refers to a range of technologies that carry out problem-solving and decision-making functions commonly associated with human thinking. The success of AI deployment is often contingent upon the scale and quality of data available to the program. For AI to mimic human thinking, an AI system is trained on a dataset and learns by identifying patterns that link inputs with outputs. Backed by computing power that automatically reproduces these patterns, the AI then applies the rules it "learned" in training to translate new inputs into recommendations, classifications, and, in some cases, predictions.

While AI can improve efficiency and reduce costs, it can also deploy bias at scale. In many high-risk use cases, such as law enforcement, health care, and employment, the AI tool's output fails when the dataset used to train the algorithm reflects bias against certain social groups or genders. Instead of promoting equity and efficiency, the AI could be reinforcing bias and multiplying employers' exposure to civil penalties.

State Laws on AI and Data Privacy

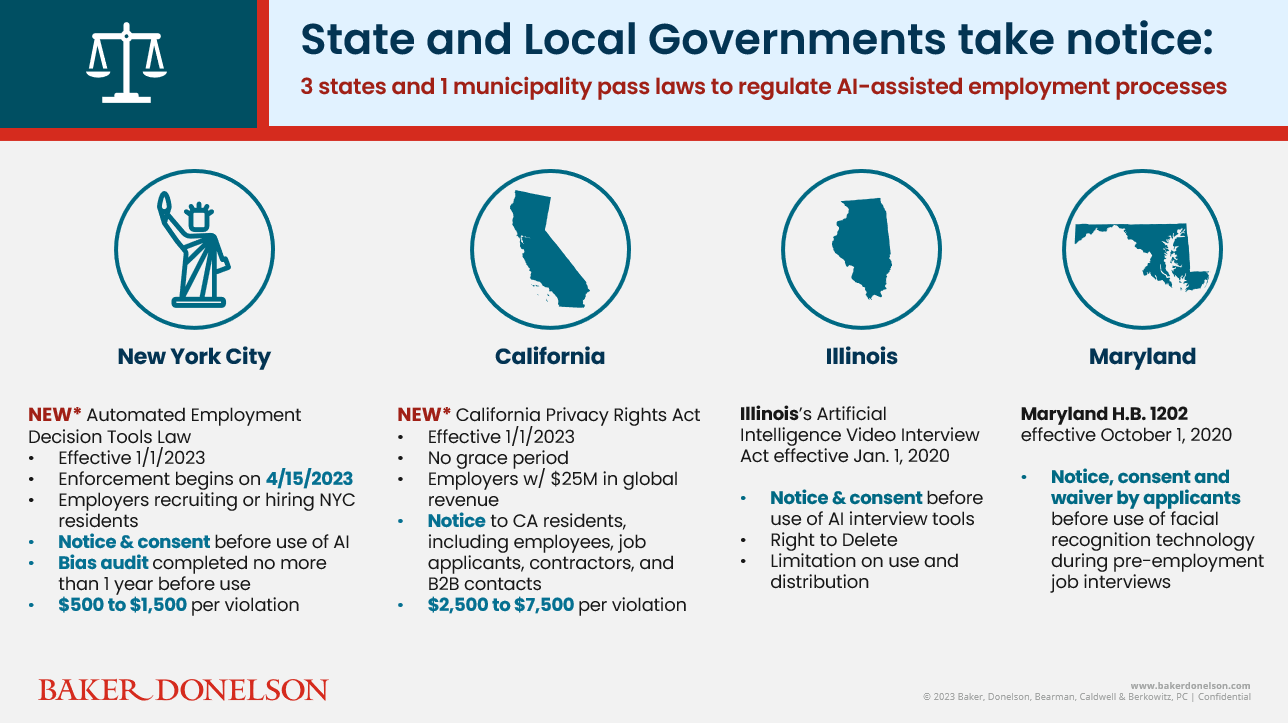

States and local governments are taking note of the proliferation of AI use throughout the employee lifecycle, from recruiting to re-skilling and retention. New York City released its latest implementation rules for the Automated Employment Decision Tools Law (AEDT Law), which took effect on January 1, 2023. Joining Illinois and Maryland, New York City became the first U.S. municipality to regulate the use of AI algorithms in employment. Employers utilizing AI in hiring must pay attention to federal, state, and local employment and AI privacy-related laws or risk significant liability.

In response to calls for scrutinizing emerging technologies in the hiring process, as noted above, New York City passed the AEDT Law, which took effect on January 1, 2023. With enforcement postponed until April 15, 2023, employers recruiting applicants from New York City have three months to comply with the following requirements:

- provide advance notice to applicants before using AI tools

- publish audit reports regarding AI bias by independent auditors conducted no more than one year prior to the use of the AI-enabled recruitment tools

- disclose data collection practices and data retention policies

Employers who violate the AEDT Law face steep civil fines of $500 for a first violation and $500 to $1,500 for each subsequent violation, assessed per affected applicant or employee.
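To give a sense of how quickly these fines can add up, the arithmetic can be sketched as follows. This is a rough illustration only: it uses the dollar figures quoted above and assumes, purely for the sake of the example, that each affected applicant counts as one violation. The function name `penalty_range` is hypothetical, and how violations are actually counted in an enforcement action may differ.

```python
# Hypothetical sketch: rough range of civil penalty exposure under the AEDT Law.
# ASSUMPTION: each affected applicant or employee counts as one violation.
# The dollar figures are the ones quoted in this alert.

FIRST_VIOLATION = 500     # dollars, first violation
SUBSEQUENT_MIN = 500      # dollars, low end for each subsequent violation
SUBSEQUENT_MAX = 1_500    # dollars, high end for each subsequent violation


def penalty_range(violations: int) -> tuple[int, int]:
    """Return the (low, high) exposure in dollars for a number of violations."""
    if violations < 0:
        raise ValueError("violations must be non-negative")
    if violations == 0:
        return (0, 0)
    subsequent = violations - 1  # every violation after the first
    low = FIRST_VIOLATION + subsequent * SUBSEQUENT_MIN
    high = FIRST_VIOLATION + subsequent * SUBSEQUENT_MAX
    return (low, high)


# Example: an AI tool used on 100 applicants without the required notice.
low, high = penalty_range(100)
print(f"Estimated exposure: ${low:,} to ${high:,}")
# prints "Estimated exposure: $50,000 to $149,000"
```

Under these assumptions, a single non-compliant hiring round involving 100 applicants could carry exposure in the range of $50,000 to $149,000.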

In addition, the California Privacy Rights Act (CPRA), which also took effect on January 1, 2023, provides California employees additional rights when it comes to how employers collect, disclose, use, share, and store their personal data, which includes data collected by AI tools in hiring. More details on how the CPRA impacts HR operations are available here. The CPRA imposes penalties of up to $2,500 per negligent violation or $7,500 per intentional violation.

Disparate Impact and Algorithm Bias

Federal laws also apply when employers use AI-enabled analytics tools to screen resumes and evaluate employees. For example, an AI-powered recruiting tool that screens resumes for five years of relevant industry experience could discriminate against women returning to the workforce after raising children or against candidates over the age of 40. A disparate impact resulting from the use of AI tools can run afoul of Title VII of the Civil Rights Act of 1964 and the Age Discrimination in Employment Act (ADEA), which prohibit discrimination against individuals based on race, color, national origin, sex, religion, or age. Importantly, these employment laws do not require that the discrimination be intentional for it to be unlawful. Job applicants and employees can potentially recover damages from a company that unintentionally screens them out or otherwise discriminates against them in the hiring or employment process. Accordingly, companies utilizing AI with an algorithm defect or bias can unintentionally discriminate against job applicants and nonetheless face liability.
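One common way to spot a potential disparate impact in screening results is to compare selection rates across groups. The sketch below is a minimal illustration, not a legal test of liability. It uses the "four-fifths" rule of thumb from the EEOC's Uniform Guidelines on Employee Selection Procedures, which this alert does not otherwise discuss, and the group labels, counts, and function names are all hypothetical.

```python
# Hypothetical sketch: comparing selection rates across applicant groups.
# ASSUMPTIONS: the group labels and counts are made-up illustration data, and
# the 0.8 threshold is the "four-fifths" rule of thumb, not a liability test.

FOUR_FIFTHS = 0.8


def selection_rates(outcomes):
    """Map each group to selected / applicants, skipping empty groups."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {g: 0.0 for g in rates}
    return {g: rate / best for g, rate in rates.items()}


def flagged_groups(outcomes, threshold=FOUR_FIFTHS):
    """Return groups whose impact ratio falls below the threshold."""
    ratios = impact_ratios(outcomes)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# (selected, applicants) after an AI resume screen; illustrative numbers only.
screening = {
    "under_40": (48, 120),
    "40_and_over": (18, 80),
}

for group, ratio in impact_ratios(screening).items():
    print(f"{group}: impact ratio {ratio:.2f}")
print("Groups below threshold:", flagged_groups(screening))
```

In this made-up data, the older group is selected at a little over half the rate of the younger group, well below the four-fifths threshold. A result like this does not establish a violation by itself, but it is the kind of signal that should prompt a closer review of the tool and its screening criteria.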

AI Hiring Tools and Accommodating Applicants with Disabilities

Employers can potentially violate the Americans with Disabilities Act (ADA) in the hiring process by failing to provide reasonable accommodations for applicants with disabilities. If an employer's hiring procedure includes a test or assessment powered by AI, the employer should make reasonable accommodations available and inform applicants of that fact. AI can also have the unintended effect of screening out applicants who need a reasonable accommodation to perform a job's functions. For example, an online candidate assessment tool may not explicitly ask disability-related questions, but an applicant may voluntarily indicate that they would not be able to perform one or more job functions. That voluntary response from the applicant can cause the AI technology to eliminate them from a pool of recommended hires or qualified applicants. Employers using AI in hiring should make sure to inform applicants with disabilities that accommodations are available and should review their procedures to eliminate any adverse impact caused by seemingly non-discriminatory questions or requirements.

AI Impact on Race and Gender in Hiring

Individuals with disabilities are not the only employees and applicants who may be negatively impacted. AI hiring and promotion tools have also been shown to unintentionally discriminate against certain races and genders. One employer had to discontinue its use of an AI tool because the tool was screening out women in the hiring process by assigning a higher rating to resumes with more masculine verbs. Aside from gender, other AI systems have also had the effect of discriminating against applicants based on race or country of origin. Employers utilizing AI should be careful to review applicant data and ensure that members of certain groups are not disproportionately eliminated from consideration.

What Else?

The negotiation of agreements with vendors offering an array of AI tools requires serious scrutiny. As our clients have asked us to review these agreements, we have been identifying issues that were not fully appreciated or recognized during review and negotiation, especially given the complexities of a licensing agreement.

Bottom Line

The use of AI across industries has become widespread and inescapable. AI is proving to be an efficient tool to streamline the hiring process and better meet the hiring needs of companies, but it does not come without drawbacks and risks. Employers that unreflectingly follow AI-generated decisions may inadvertently reinforce biases and undermine their goals of promoting equality in the workplace. As companies continue to digitalize HR operations, they must think strategically about best practices to utilize AI-assisted tools to boost talent acquisition and retention. This may include updating HR procedures to limit potential disparate impacts, conducting due diligence on HR-tech providers, and amending current HR privacy notices to comply with state privacy or AI-related regulations.

If you have questions or would like assistance reviewing your agreements, reach out to Vivien F. Peaden, Katelyn R. Dwyer, Kayla M. Wunderlich, or any member of Baker Donelson's Labor & Employment Team or Data Protection, Privacy, and Cybersecurity Team.