Who will play the leading role if we make a new series about AI? If you interview Steven Spielberg, Sundar Pichai, and Sam Altman, you might get three very different answers, ranging from the 2001 classic "A.I. Artificial Intelligence" to HBO's "Succession." Ask members of the European Parliament, and they will likely point you to the long journey that culminated in the final vote to pass the EU's Artificial Intelligence Act (EU AI Act) on March 13, 2024. After years of debate and negotiation since the European Commission first proposed it in April 2021, the world's first sweeping AI regulation is finally here.

How does the EU AI Act impact U.S. businesses that develop or use AI?

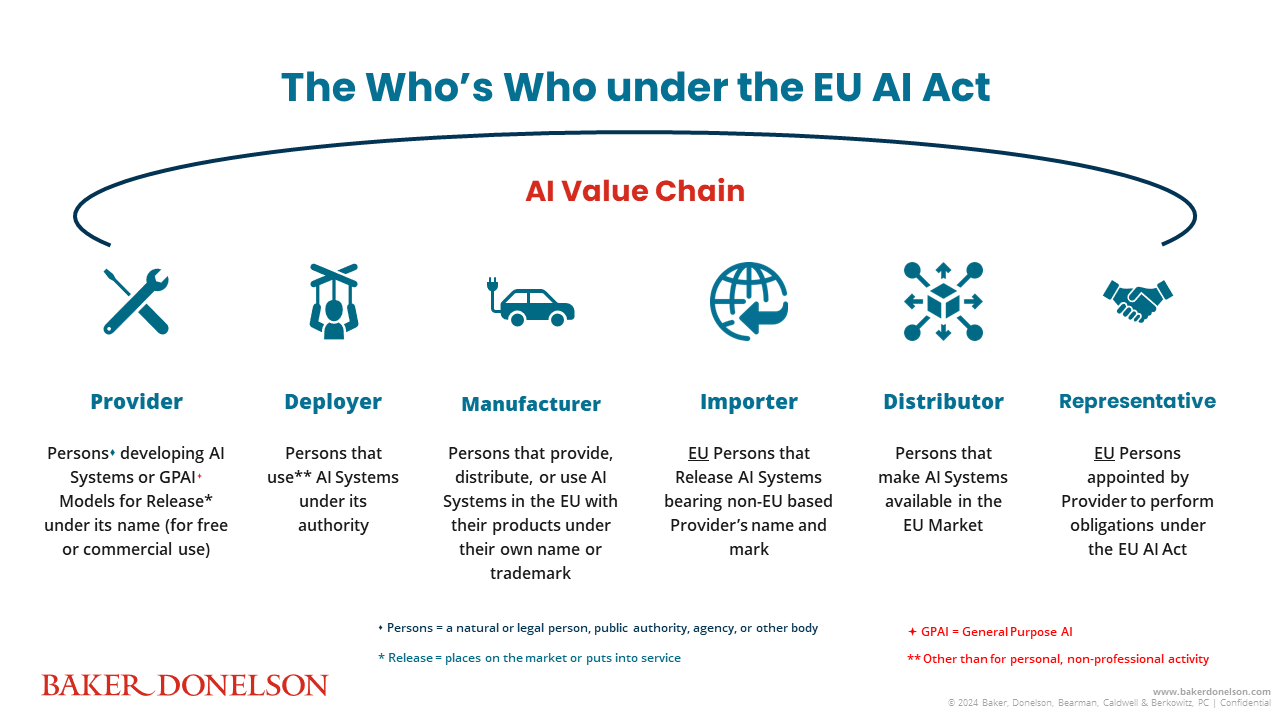

The EU AI Act will have far-reaching impacts on key players within the "AI value chain," which consists of the Provider, Deployer, Product Manufacturer, Importer, Distributor, and Authorized Representative. Each role carries a different set of compliance obligations, covering areas such as data governance, transparency, and technical documentation. Much like the General Data Protection Regulation (GDPR), which took effect in 2018, the EU AI Act applies to companies in all sectors that develop or distribute AI in the EU market, as well as to entities whose AI systems produce outputs that affect EU residents.

The EU AI Act imposes steep fines: up to €35 million or 7 percent of total worldwide annual turnover (whichever is greater) for engaging in prohibited AI practices in the EU market, and up to the greater of €15 million or 3 percent of worldwide annual turnover if a party violates its respective obligations within the AI value chain. Therefore, U.S. companies must carefully assess the role(s) they play within the AI value chain and adapt their product design and compliance mechanisms accordingly.
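To illustrate the "whichever is greater" arithmetic, here is a minimal Python sketch that computes the fine ceiling under the two tiers described above. The function name `max_fine_eur` and its parameters are hypothetical. The sketch assumes turnover is given in whole euros, rounds down to the whole euro, and ignores any special rules or factors that authorities may weigh when setting an actual fine. It shows only the upper bound, not a predicted penalty.

```python
def max_fine_eur(worldwide_annual_turnover_eur: int, prohibited_practice: bool) -> int:
    """Return the fine ceiling: the greater of a fixed cap or a share of turnover.

    Hypothetical helper for illustration only. Tier values follow the text:
    EUR 35 million / 7 percent for prohibited AI practices,
    EUR 15 million / 3 percent for other value-chain obligations.
    """
    if prohibited_practice:
        fixed_cap_eur, percent = 35_000_000, 7
    else:
        fixed_cap_eur, percent = 15_000_000, 3
    # Integer arithmetic avoids floating-point rounding; the result is rounded down.
    turnover_based_eur = worldwide_annual_turnover_eur * percent // 100
    return max(fixed_cap_eur, turnover_based_eur)


if __name__ == "__main__":
    # EUR 1 billion turnover: 7% (EUR 70M) exceeds the EUR 35M fixed cap.
    print(max_fine_eur(1_000_000_000, prohibited_practice=True))   # 70000000
    # EUR 100 million turnover: 3% (EUR 3M) is below the EUR 15M fixed cap.
    print(max_fine_eur(100_000_000, prohibited_practice=False))    # 15000000
```

Under both tiers, the turnover-based figure overtakes the fixed amount once worldwide annual turnover exceeds €500 million (€35 million / 7% = €15 million / 3% = €500 million). For larger companies, the percentage is therefore the number to watch.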

Which AI Systems and GPAI models are governed by the EU AI Act?

The final text of the EU AI Act adopts a broad definition of "AI System" that covers any machine-based system designed to operate with varying levels of autonomy that "infers, from the input it receives, how to generate outputs such as…content, recommendations, or decisions." In response to the advent of generative AI, the EU AI Act also introduces the new concept of the "General Purpose AI Model" or "GPAI Model." Sometimes referred to as a "foundation model," a GPAI Model can serve as the basis of a stand-alone system or as a key component of specialized AI Systems. Two well-known examples of GPAI Models are OpenAI's GPT-4 and DALL-E. Since their release, both models have become critical building blocks that developers use to build AI Systems for a wide range of applications.

The EU AI Act categorizes AI Systems based on the potential harm they may cause, including "High-Risk AI Systems" used in the following sectors: critical infrastructure, employment, environment, credit scoring, elections, border control, health, and the rule of law (additional context is available in a previous Client Alert). As outlined below, key actors within the AI value chain will face heightened compliance requirements for their development, use, and distribution of High-Risk AI Systems.

Who are the Key Actors within the AI Value Chain?

1. Provider

At the center of the AI value chain is the "Provider": the person or entity that develops an AI System or GPAI Model and offers it under its own name or trademark. The EU AI Act applies to AI developers when their services, products, or output reach the EU market under the following scenarios:

(i) Placing AI on the market, which refers to first making available an AI System or a GPAI Model on the EU market;

(ii) Putting AI into service, which means supplying an AI System for first use directly to a Deployer, or for the Provider's own use, in the EU market; and

(iii) Producing AI output, which means the development of an AI System that produces output used in the EU, such as output affecting EU residents' education, employment, or product safety.

Providers that meet any one of the three criteria must comply with the new regulations irrespective of where they are established or located, and regardless of whether the AI is distributed for payment or free of charge. For Providers of any High-Risk AI System, the EU AI Act sets stringent compliance requirements throughout the system's development and implementation lifecycle. These measures include comprehensive documentation, conformity assessment, and risk management. For certain High-Risk AI Systems, the Provider must also register the system in an EU database before it is used or distributed on the EU market.
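The applicability test for Providers can be sketched as a simple screening check. This Python sketch is illustrative only. The `ProviderFacts` fields and the `provider_in_scope` function are hypothetical names, and the sketch reduces each of the three scenarios to a yes/no answer, which real-world legal analysis will rarely allow. What it captures is the structure of the rule: meeting any one scenario suffices, while place of establishment and payment do not affect the outcome.

```python
from dataclasses import dataclass


@dataclass
class ProviderFacts:
    """Hypothetical yes/no answers for a single AI System or GPAI Model."""
    places_on_eu_market: bool        # scenario (i): first made available on the EU market
    puts_into_service_in_eu: bool    # scenario (ii): supplied for first use in the EU
    output_used_in_eu: bool          # scenario (iii): produces output used in the EU
    established_in_eu: bool = False  # recorded, but irrelevant to the test
    offered_for_payment: bool = False  # recorded, but irrelevant to the test


def provider_in_scope(facts: ProviderFacts) -> bool:
    # Any single scenario triggers the Act; establishment and payment are ignored.
    return (
        facts.places_on_eu_market
        or facts.puts_into_service_in_eu
        or facts.output_used_in_eu
    )


if __name__ == "__main__":
    # A free tool offered by a U.S. company whose output is used in the EU is still in scope.
    us_free_tool = ProviderFacts(
        places_on_eu_market=False,
        puts_into_service_in_eu=False,
        output_used_in_eu=True,
        established_in_eu=False,
        offered_for_payment=False,
    )
    print(provider_in_scope(us_free_tool))  # True
```

Keeping `established_in_eu` and `offered_for_payment` as recorded but unused fields makes the point explicit: changing them never changes the result.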

2. Deployer

As we navigate the frontier of AI technology, a "Deployer" plays a crucial role in putting AI Systems to work in real-life applications. Under the EU AI Act, a "Deployer" means "any natural or legal person, public authority, agency or other body using an AI System under its authority except where the AI System is used in the course of a personal non-professional activity." The new regulations apply where a Deployer is established or located in the EU, or where it operates AI Systems that produce output used in the EU.

The final text of the new regulations introduces the following requirements for Deployers that operate High-Risk AI Systems:

(i) Use and Data Governance, which requires monitoring the operation of AI Systems in accordance with their instructions for use and ensuring that input data is appropriate for the intended purpose.

(ii) Personnel Training, which ensures all personnel handling High-Risk AI Systems are sufficiently trained and qualified.

(iii) Regulatory Compliance, which refers to the EU AI Act's requirements to comply with applicable EU sectoral legislation and to conduct data protection impact assessments, among others.

(iv) Incident Reporting, which requires written notification to Providers, Distributors, and relevant authorities upon discovery of a material malfunction of a High-Risk AI System.

Finally, a Deployer could be reclassified as the "Provider" of a High-Risk AI System if it operates the system under its own name or brand, or modifies the system in violation of its instructions for use or for unintended purposes. Similarly, an Importer or Distributor (as defined below) will also be subject to heightened compliance requirements as a result of inappropriate branding or unauthorized modifications.

3. Product Manufacturer

AI is no longer a futuristic concept: AI Systems are rapidly being integrated into product design and content development. The final EU AI Act includes "Product Manufacturers" within its scope where they provide, distribute, or use AI Systems in the EU market together with their products and under their own name or trademark. In other words, incorporating AI Systems into product design could subject a Product Manufacturer to the EU AI Act regardless of where it is established or located.

For example, where a U.S. auto OEM incorporates an AI System to monitor battery usage, provide navigation, or support self-driving functions, and distributes the vehicle under its own name or trademark in the EU, that OEM is a "Product Manufacturer" subject to the EU AI Act. Further, where such an AI System is classified as a High-Risk AI System because it serves as a safety component of the vehicle, the OEM assumes the role of an AI Provider, along with the applicable compliance obligations.

4. Importer

Within the AI value chain, an Importer acts as a gatekeeper before certain AI Systems from non-EU countries enter the EU market. Under the new regulations, an "Importer" is an EU-based natural or legal person that places on the EU market an AI System bearing the name or trademark of a Provider established outside the EU. An Importer must also fulfill rigorous due diligence and record-keeping obligations before placing a High-Risk AI System on the EU market.

These diligence efforts may include verifying that a Provider has completed the conformity assessment, prepared the technical documentation, appointed an Authorized Representative, and met other requirements. An Importer must also label the High-Risk AI System with its own contact information and registered trademark, similar to a customs clearance process that requires information about a product's country of origin, contents, and relevant disclaimers.

5. Distributor

A "Distributor" under the EU AI Act refers to any natural or legal person (other than a Provider or Importer) that makes AI Systems or GPAI Models available for distribution or use on the EU market, whether for payment or free of charge. Notably, a Distributor need not be established or located in the EU, and need not be the first party to release the AI System or GPAI Model in the EU. As a critical link in the AI value chain, a Distributor must verify that the relevant Providers and Importers meet certain requirements and must cooperate with national authorities' efforts to verify compliance. If a Distributor suspects noncompliance, it must withdraw the affected AI System or GPAI Model from the EU market until the Provider or Importer completes the required corrective actions.

6. Authorized Representative

An "Authorized Representative" under the EU AI Act is located or established in the EU and serves as an intermediary between AI Providers outside the EU, on the one hand, and European authorities and consumers, on the other. A Provider outside the EU must appoint its Authorized Representative through a written agreement (known as a mandate) to carry out certain compliance obligations and procedures on its behalf. These obligations may include verifying the Provider's compliance, submitting documentation to the national competent authority, and retaining records for ten years.

What are the action items for U.S.-based companies within the AI value chain?

In an increasingly interconnected world where countless products are set to include one or more AI Systems, U.S.-based companies should ask themselves the following questions:

(i) Roles. What are our roles within the AI Value Chain? Are we a Provider, Deployer, Importer, or Distributor?

(ii) Products.

- Are we a Product Manufacturer that incorporates AI Systems in our products?

- Will our products be distributed, operated, or otherwise provide output used in the EU?

(iii) Risks. Are we providing AI Systems that are classified as "Prohibited AI Systems," "High-Risk AI Systems," or "General Purpose AI built on GPAI Models"?

Summary

As we navigate the intricate new landscape laid out under the EU AI Act, each actor plays an integral role in proactively managing risks throughout the lifecycle of an AI System. Compliance with the rigorous requirements of the new regulations will become a shared responsibility across the AI value chain. Given the size and strategic value of the EU market, U.S.-based companies cannot afford to delay compliance with the EU AI Act without risking a loss of market share.

To steal a line from a popular adage, AI won't replace your products, but products with AI governance will replace those without it.

For more information or assistance on this topic, please contact Vivien Peaden, CIPP/US, CIPP/E, CIPM, PLS, or a member of Baker Donelson's AI Team.

Belana H. Knossalla, a current law student at the University of Göttingen, contributed to this alert.